In one of the good parts of the very mixed bag that is

"Lo and Behold: Reveries of the Connected World",

Werner Herzog asks his interviewees what the Internet might dream of, if it

could dream.

The best answer he gets is along the lines of: The Internet of before

dreamed a dream of the World Wide Web. It dreamed some nodes were

servers, and some were clients. And that dream became current reality,

because that's the essence of the Internet.

Three years ago, it seemed like perhaps another dream was developing

post-Snowden, of dissolving the distinction between clients and servers,

connecting peer-to-peer using addresses that are also cryptographic public

keys, so authentication and encryption and authorization are built in.

Telehash is one hopeful attempt at this; others include snow, cjdns, i2p,

etc. So far, none of them seem to have developed into a widely

used network, although any of them still might get there. There are a lot

of technical challenges due to the current Internet dream/nightmare, where

the peers on the edges have multiple barriers to connecting to other

peers.

But, one project has developed something similar to the new dream,

almost as a side effect of its main goals:

Tor's onion services.

I'd wanted to use such a thing in

git-annex,

for peer-to-peer sharing and syncing of git-annex repositories. On November

13th, I started building it, using Tor, and I'm releasing it concurrently with

this blog post.

git-annex's Tor support replaces its old hack of tunneling git

protocol over XMPP. That hack was unreliable (it needed a TCP-on-top-of-XMPP

layer) but worse, the XMPP server could see all the data being transferred.

And, there are fewer large XMPP servers these days, so fewer users could

use it at all. If you were using XMPP with git-annex, you'll need to switch

to either Tor or a server accessed via ssh.

Now git-annex can serve a repository as a Tor onion service, and that can

then be accessed as a git remote, using a URL like

tor-annex::tungqmfb62z3qirc.onion:42913. All the regular git and

git-annex commands can be used with such a remote.
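
To make the shape of that URL concrete, here is a small sketch that splits it into an onion address and a port. It is only an illustration: the OnionRemote type and the parseTorAnnexUrl function are hypothetical names made up for this example, not git-annex's own code, and the validation rules (a ".onion" suffix, a port between 1 and 65535) are assumptions.

```haskell
import Data.List (isSuffixOf, stripPrefix)
import Text.Read (readMaybe)

-- Hypothetical record for the two pieces of a tor-annex URL.
data OnionRemote = OnionRemote
  { onionHost :: String
  , onionPort :: Int
  } deriving (Eq, Show)

-- Parse "tor-annex::<name>.onion:<port>", returning Nothing on anything else.
parseTorAnnexUrl :: String -> Maybe OnionRemote
parseTorAnnexUrl url = do
  rest <- stripPrefix "tor-annex::" url
  -- Everything up to the first ':' is the host, the remainder is ":<port>".
  let (host, portPart) = break (== ':') rest
  portStr <- stripPrefix ":" portPart
  port <- readMaybe portStr
  if ".onion" `isSuffixOf` host
      && length host > length ".onion"
      && port >= 1 && port <= 65535
    then Just (OnionRemote host port)
    else Nothing
```

Loaded into GHCi, parseTorAnnexUrl applied to the example URL above yields the host tungqmfb62z3qirc.onion and the port 42913, while a string without the tor-annex:: prefix yields Nothing.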

Tor has a lot of goals for protecting anonymity and privacy. But the

important things for this project are just that it has end-to-end

encryption, with addresses that are public keys, and allows P2P

connections. Building an anonymous file exchange on top of Tor is not my

goal -- if you want that, you probably don't want to be exchanging git

histories that record every edit to the file and expose your real name by

default.

Building this was not without its difficulties.

Tor onion services were originally intended to run hidden websites,

not to connect peers to peers, and this kind of shows.

Tor does not cater to end users setting up lots of onion services.

Either root has to edit the

torrc file, or the Tor control port can be

used to ask it to set one up. But, the control port is not enabled by

default, so you still need to su to root to enable it. Also, it's difficult

to find a place to put the hidden service's unix socket file that's

writable by a non-root user. So I had to code around this, with a

git annex enable-tor command that su's to root and sets it all up for you.

One interesting detail about the implementation of the P2P protocol in

git-annex is that it uses two Free monads to build up actions. There's a Net

monad which can be used to send and receive protocol messages, and a Local

monad which allows only the necessary modifications to files on disk.

Interpreters for Free monad actions can choose exactly which actions to

allow for security reasons.

For example, before a peer has authenticated,

the P2P protocol is being run by an interpreter that refuses to run any

Local actions whatsoever. Other interpreters for the Net monad could be

used to support other network transports than Tor.
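
The idea can be sketched in a few dozen lines. This is a simplified illustration, not git-annex's actual protocol code: it folds the Net and Local instruction sets into one sum functor, defines a tiny Free monad inline so that only base is needed, and uses made-up action names (sendMessage, receiveMessage, storeContent) and a pure interpreter that just records what would have happened.

```haskell
{-# LANGUAGE DeriveFunctor #-}

-- A minimal Free monad, defined here so the sketch depends only on base.
data Free f a = Pure a | Free (f (Free f a))

instance Functor f => Functor (Free f) where
  fmap g (Pure a) = Pure (g a)
  fmap g (Free fa) = Free (fmap (fmap g) fa)

instance Functor f => Applicative (Free f) where
  pure = Pure
  Pure g <*> x = fmap g x
  Free fg <*> x = Free (fmap (<*> x) fg)

instance Functor f => Monad (Free f) where
  Pure a >>= k = k a
  Free fa >>= k = Free (fmap (>>= k) fa)

-- Network actions: send or receive one protocol message (hypothetical).
data NetF next
  = SendMessage String next
  | ReceiveMessage (String -> next)
  deriving Functor

-- Local actions: the only way the protocol may touch the disk (hypothetical).
data LocalF next = StoreContent FilePath String next
  deriving Functor

-- Assumption: both instruction sets combined into a single functor.
data ProtoF next = Net (NetF next) | Local (LocalF next)
  deriving Functor

type Proto = Free ProtoF

sendMessage :: String -> Proto ()
sendMessage m = Free (Net (SendMessage m (Pure ())))

receiveMessage :: Proto String
receiveMessage = Free (Net (ReceiveMessage Pure))

storeContent :: FilePath -> String -> Proto ()
storeContent f c = Free (Local (StoreContent f c (Pure ())))

-- Which actions an interpreter is willing to run.
data Policy = NetOnly | NetAndLocal

-- Pure interpreter: takes the incoming messages and returns the messages
-- sent, the files that would be stored, and the result or a refusal.
run :: Policy -> [String] -> Proto a
    -> ([String], [(FilePath, String)], Either String a)
run policy = go [] []
  where
    finish out files r = (reverse out, reverse files, r)
    go out files _ (Pure a) = finish out files (Right a)
    go out files inbox (Free (Net (SendMessage m next))) =
      go (m : out) files inbox next
    go out files inbox (Free (Net (ReceiveMessage k))) = case inbox of
      (m : rest) -> go out files rest (k m)
      [] -> finish out files (Left "connection closed")
    go out files inbox (Free (Local (StoreContent f c next))) = case policy of
      NetAndLocal -> go out ((f, c) : files) inbox next
      NetOnly -> finish out files (Left "local action refused")

-- A toy protocol action: announce readiness, receive content, store it.
receiveFile :: Proto ()
receiveFile = do
  sendMessage "READY"
  content <- receiveMessage
  storeContent "incoming.dat" content
  sendMessage "SUCCESS"
```

Running receiveFile with the NetOnly policy stops at the storeContent step with a refusal, which is the role the pre-authentication interpreter plays; the same action run with NetAndLocal completes and records the stored file. Because the protocol is just data until an interpreter walks it, swapping in a different interpreter for the Net actions is also how another transport could be supported.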

When two peers are connected over Tor, one knows it's talking to the

owner of a particular onion address, but the other peer knows nothing about

who's talking to it, by design. This makes authentication harder than it

would be in a P2P system with a design like Telehash.

So git-annex does its own authentication on top of Tor.

With authentication, users would need to exchange absurdly long

addresses (over 150 characters) to connect their repositories. One very

convenient thing about using XMPP was that a user would have connections to

their friends' accounts, so it was easy to share with them. Exchanging long

addresses is too hard.

This is where

Magic Wormhole

saved the day. It's a very elegant way to get any two peers in touch

with each other, and the users only have to exchange a short code

phrase, like "2-mango-delight", which can only be used once. Magic Wormhole

makes some security tradeoffs for this simplicity. It's vulnerable

to DoS attacks, and its MITM resistance could be improved. But I'm lucky

it came along just in time.

So, it takes only installing Tor and Magic Wormhole,

running two git-annex commands, and exchanging short code phrases with

a friend, perhaps over the phone or in an encrypted email, to get

your git-annex repositories connected and syncing over Tor.

See the documentation

for details. Also, the git-annex webapp allows

setting the same thing up point-and-click style.

The Tor project blog has throughout December been

featuring all kinds of

projects that are using Tor.

Consider this a late bonus addition to that. ;)

I hope that Tor onion services will continue to develop to make them easier

to use for peer-to-peer systems. We can still dream a better Internet.

This work was made possible by all my supporters on

Patreon.

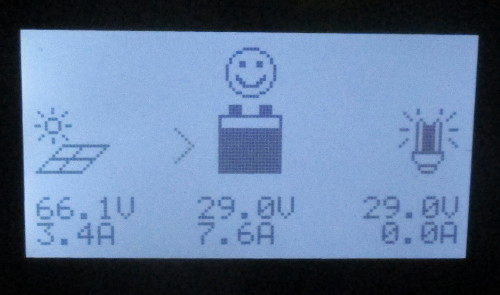

Success! I received the Tracer4215BN charge controller where UPS

accidentally-on-purpose delivered it to a neighbor, and got it connected

up, and the battery bank rewired to 24V in a couple hours.

Here it's charging the batteries at 220 watts, and that picture was taken

at 5 pm, when the light hits the panels at nearly a 90 degree angle.

Compare with the old panels, where the maximum I ever recorded at high noon

was 90 watts. I've made more power since 4:30 pm than I used to be able to

make in a day! \o/
